In one of my previous posts I’ve noted that the GCP Cloud SQL for SQL Server doesn’t have point of time recovery as of March 2022. As result the default out of box backups can only provide RPO as 24 hours or more. The exact RPO might vary from day to day since you can only specify a window for backup but not exact time. So far it seems like the only reasonable approach to reduce the RPO is to schedule on-demand backups, and in this post I am going to show how you can do that using a couple of different approaches.

The first approach would be using only Google Cloud Scheduler and an HTTP request to the GCP REST API. It is the simplest way and it doesn’t require too much effort.

I assume you have already enabled the API for Cloud Scheduler. The next step is to set up a service account for execution. Your service account should have permissions to run Cloud SQL backups in the project. The permissions are “cloudsql.backupRuns.*”. I’ve created an account “us-ce1-k8s-sandbox” and provided role “cloudsql.editor”.

user@51f3fd276c2b:/# gcloud iam service-accounts create us-ce1-k8s-sandbox Created service account [us-ce1-k8s-sandbox]. user@51f3fd276c2b:/#gcloud projects add-iam-policy-binding gleb-sandbox --member="serviceAccount:us-ce1-k8s-sandbox@gleb-sandbox.iam.gserviceaccount.com" --role="roles/cloudsql.editor"

IYou can improve it by providing a more granular approach to roles and use a custom role with only required permissions for the backup service account instead using one of the predefined roles.

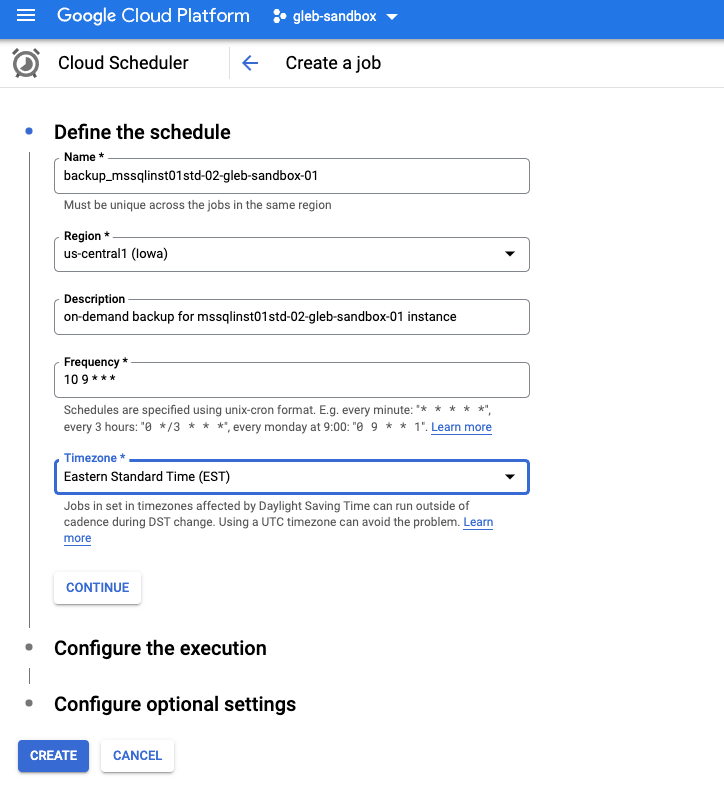

The next step is to create the schedule and for that step we need to define several parameters:

- Region – “us-central1” in my case

- Instance name – “mssqlinst01std-02-gleb-sandbox-01″ is my test instance

- Schedule in cron format – “10 9 * * *” is translated as every day at 9:10 am

- Timezone – “America/Toronto” – is my local timezone

Having all those parameters we can now setup the scheduler either in the GUI console :

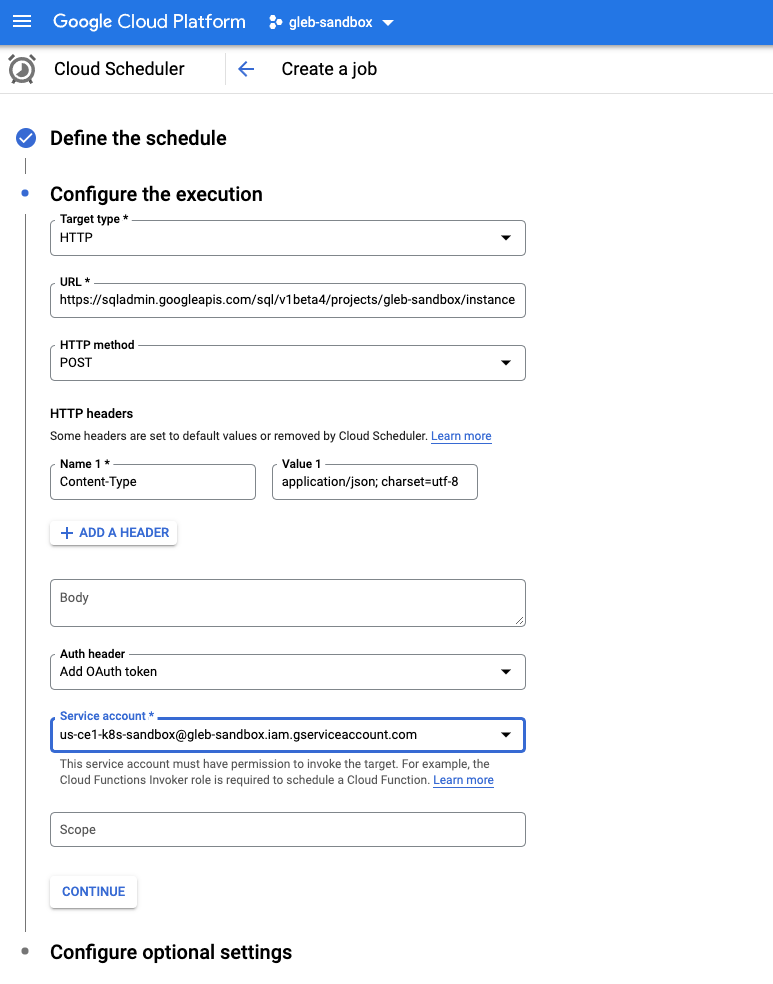

Push “Continue” and supply the next portion of the values on the next screen:

- Target type – “HTTP“

- URL – “https://sqladmin.googleapis.com/sql/v1beta4/projects/gleb-sandbox/instances/mssqlinst01std-02-gleb-sandbox-01/backupRuns” – the URL has format “https://sqladmin.googleapis.com/sql/v1beta4/projects/{project id}/instances/{instance name}/backupRuns“

- HTTP method – “POST” in our case

And that’s it. You can do the same using the gcloud command:

gcloud scheduler jobs create http backup_mssqlinst01std-02-gleb-sandbox-01 --location=us-central1 --schedule='10 9 * * *' --uri=https://sqladmin.googleapis.com/sql/v1beta4/projects/gleb-sandbox/instances/mssqlinst01std-02-gleb-sandbox-01/backupRuns --oauth-service-account-email='us-ce1-k8s-sandbox@gleb-sandbox.iam.gserviceaccount.com' --description='on-demand backup for mssqlinst01std-02-gleb-sandbox-01 instance' --time-zone='America/Toronto'

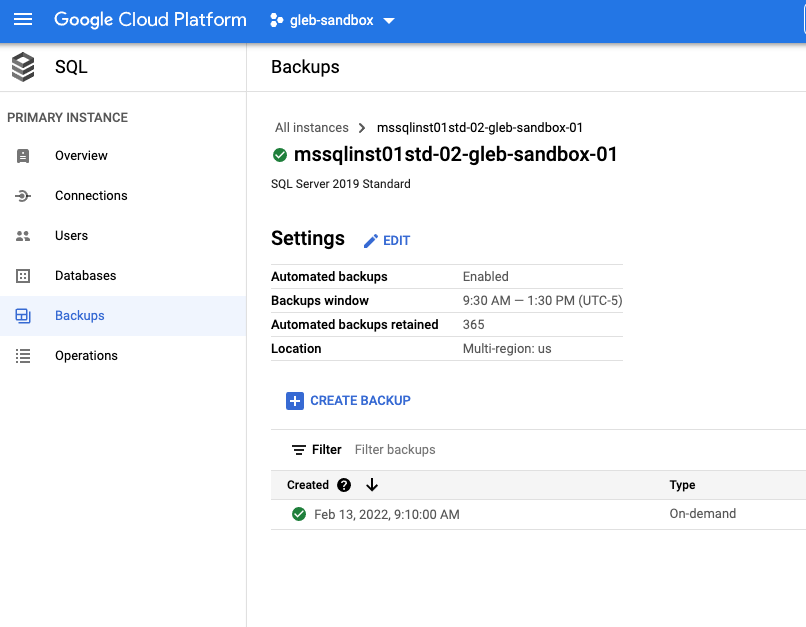

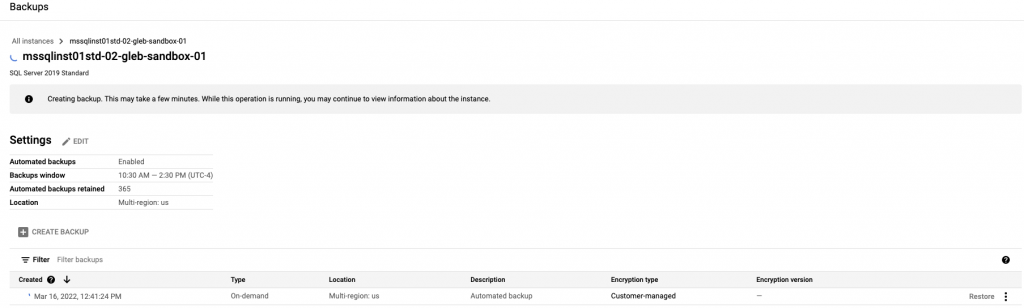

If we have a look at the instance backups page in the scheduled time we can see the backup is being executed.

The method is simple and easy to implement but maybe not flexible enough and doesn’t allow adding additional parameters.

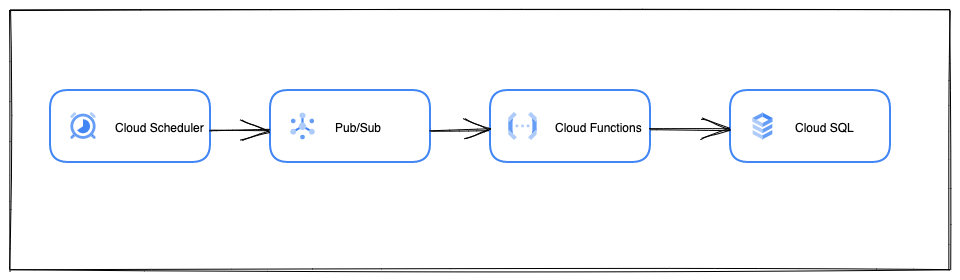

The second approach includes more components and requires more effort but if you want to be able to improve the workflow and add additional conditions it might be more attractive. For the second case we use GCP Scheduler, Pub/Sub and Functions. Here is a simple diagram showing the process:

First, we need to enable APIs for Pub/Sub, Functions and Scheduler. Then we need to create the service account to execute the backup. We can use the same account as in the previous example.

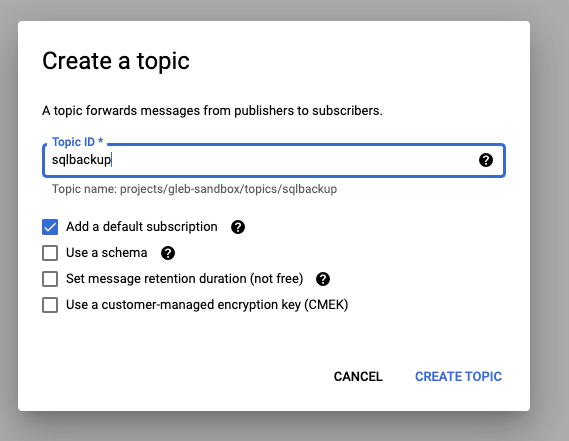

Then we need to create a Pub/Sub topic. I’ve created a topic with the name “sqlbackup”.

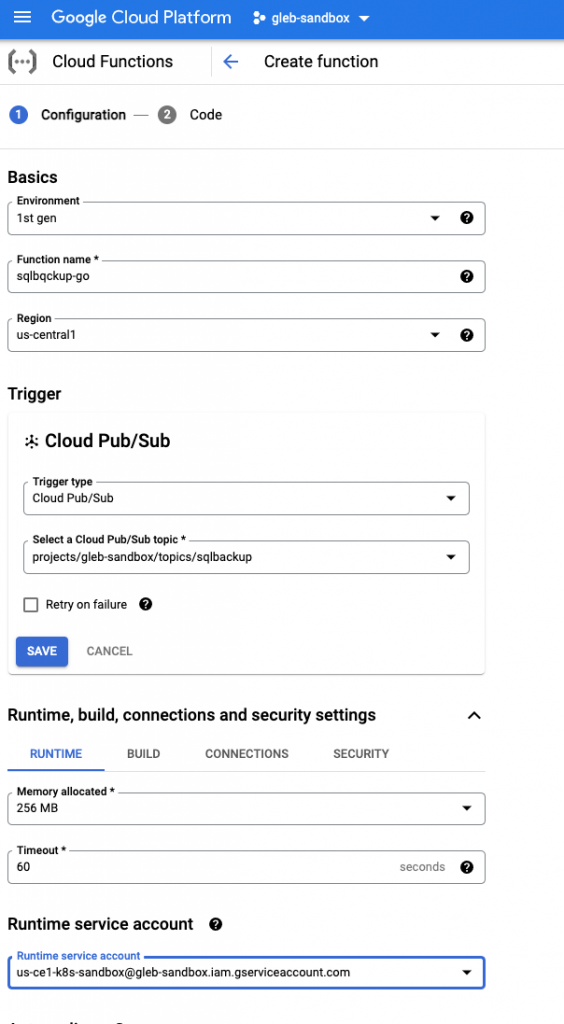

We need a function which will be triggered by messages published to the topic. I’ve written a sample function taking a backup using parameters from a Pub/Sub message published to the topic. And the function is using the google service account I’ve created earlier.

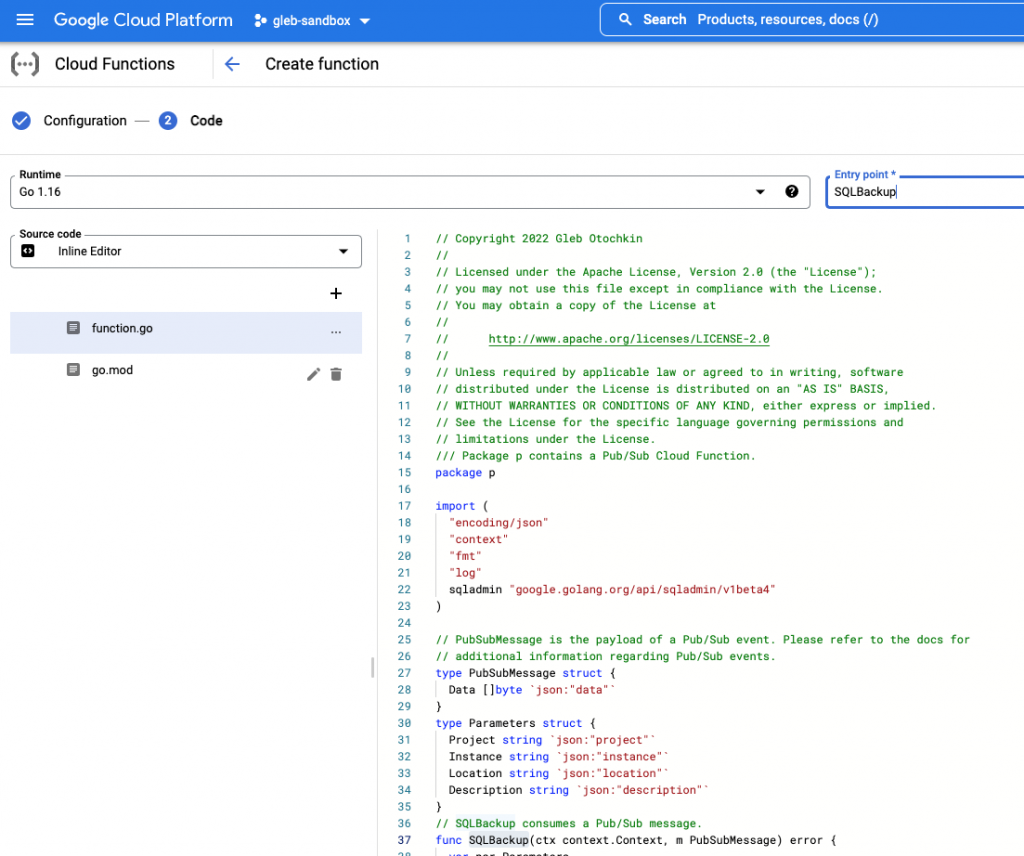

On the second tab we publish the code and define the name of the function to be called. I’ve created the function using Go language and the GCP clients libraries.

You can find full source code for the function in my Github repository.

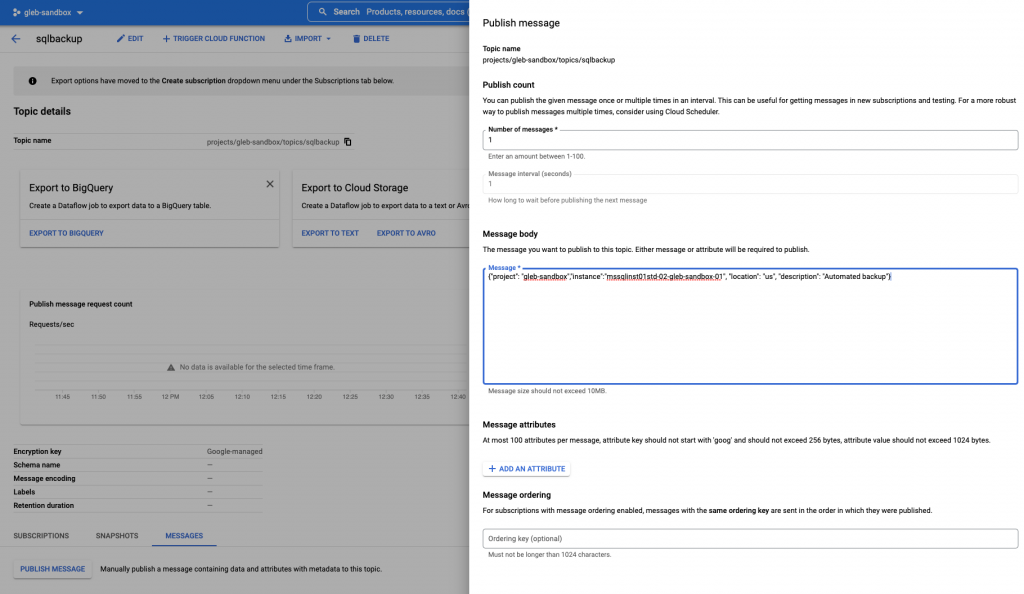

After deploying the function we can test it by publishing a message with project, instance name, backup location and description to our “sqlbackup” topic.

Here is the full text of the message:

{"project": "gleb-sandbox","instance":"mssqlinst01std-02-gleb-sandbox-01", "location": "us", "description": "Automated backup"}

And after publishing the message we can see that the backup is indeed running.

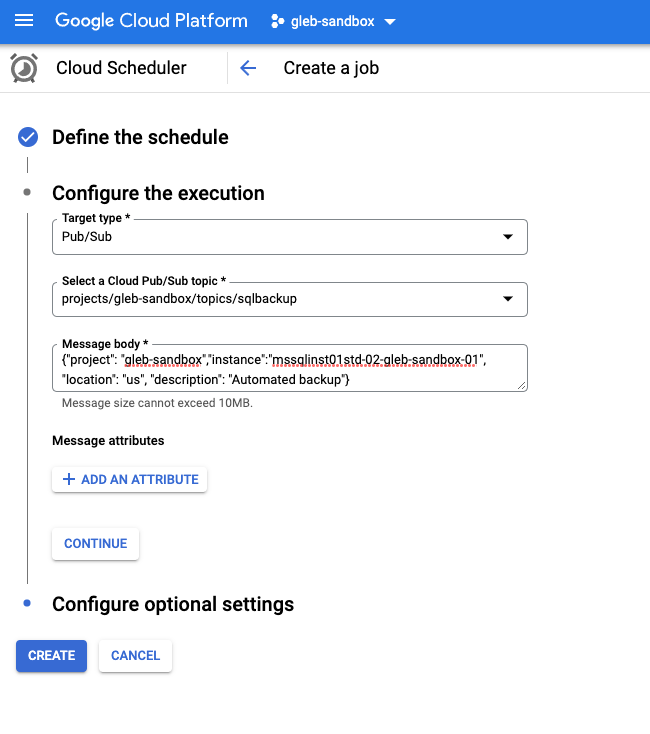

Now we need only schedule the publishing of our message to the Pub/Sub topic in the GCP Scheduler. The first screen will be the same as for the first approach but on the second screen we need to specify different parameters for the scheduler:

- Target type – Pub/Sub

- Topic – “project/{my-project}/topics/sqlbackup”

- Message body – our message body we use to initiate the backup with the backup parameters.

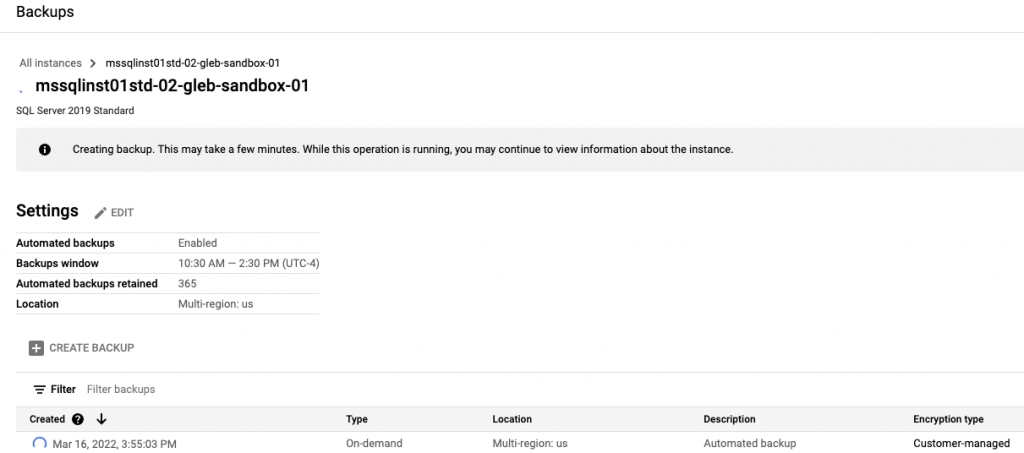

At the scheduled time we can see our backup to be executed.

I hope the post can help to build the reliable backups and serve as a starting point for some more advanced cases. For example I can see that we need to schedule deletion of old backups based on our retention policy and maybe some other tasks to be included. Happy coding.