The blog was supposed to be a small how-to but it has grown to a bigger one and hopefully might help to avoid some minor problems while exporting data from an Autonomous Database (ADB) in Oracle Cloud (OCI). It is about exporting data to the Oracle DataPump format to move data to another database or as a logical “backup”.

Oracle documentation provides sufficient information but I find it more and more difficult to navigate considering the number of options and flavours for Oracle databases. There are some new ways and tools around Oracle OCI ATP which can help in some cases. If you want you can jump directly to the end to read the summary.

The first thing we need is to choose where we want to put our dump file. We have an option to export it to the internal Oracle directory or directly to the object storage. When we export data to the internal directory you can use normal DataPump API and tools like expdp. Last version of the Oracle SQLcl tool supports DataPump too but I was not successful trying to export data directly to the OCI object storage bucket.

Let’s start from exporting to an Oracle directory. You can use either the default “DATA_PUMP_DIR” or create your own. Behind the scenes it is going to use the same DBFS, so the difference is only in permissions to the directory.

SQL> create directory data_export_dir as 'app_export'; Directory DATA_EXPORT_DIR created. SQL> select * from dba_directories; OWNER DIRECTORY_NAME DIRECTORY_PATH ORIGIN_CON_ID ________ ___________________________ _______________________________________________________________________________________________ ________________ SYS HO_TEMPLATES_CONF_DIR /u02/nfsad1/gateway_nfs/template_conf_files 1 ... redacted ... SYS OPATCH_LOG_DIR /u02/app/oracle/homes/OraHome1/rdbms/log 1 SYS JAVA$JOX$CUJS$DIRECTORY$ /u02/app/oracle/product/21.1.0.0/dbhome_1/javavm/admin/ 1 SYS DATA_EXPORT_DIR /u03/dbfs/DC7C912B04CD03F0E0533D11000AD61D/data/app_export 667 SYS DATA_PUMP_DIR /u03/dbfs/DC7C912B04CD03F0E0533D11000AD61D/data/dpdump 667 SYS SQL_TCB_DIR /u03/dbfs/DC7C912B04CD03F0E0533D11000AD61D/data/tcb_dir 667 SYS SCHEDULER$_LOG_DIR /u03/dbfs/DC7C912B04CD03F0E0533D11000AD61D/data//u01/app/oracle/homes/OraHome1/scheduler/log 667 22 rows selected. SQL> SELECT * FROM DBMS_CLOUD.LIST_FILES('DATA_PUMP_DIR'); OBJECT_NAME BYTES CHECKSUM CREATED LAST_MODIFIED ______________________ ________ ___________ __________________________________ __________________________________ load_OML4PY_140.log 344 22-04-12 21:56:58,894529000 GMT 22-04-12 21:56:59,390757000 GMT load_OML4PY_136.log 344 22-04-12 21:55:37,047885000 GMT 22-04-12 21:55:38,059632000 GMT SQL>

It is using DBFS in the DATA tablespace and you can list the files using DBMS_CLOUD.LIST_FILES procedure.

The export itself is not too much different than for any on-prem or other databases but keep in mind that it will use your DATA space. So, if you, for example, do it on your free tier or exporting a huge dataset it might hit a problem with space.

[opc@gleb-bastion-us ~]$ expdp admin/********#@glebatp02_tp tables=TITLE_EPISODE dumpfile=title_episode.dmp encryption_pwd_prompt=yes logfile=export.log directory=data_pump_dir EXCLUDE=statistics,index Export: Release 21.0.0.0.0 - Production on Wed Apr 13 14:43:10 2022 Version 21.3.0.0.0 Copyright (c) 1982, 2021, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production Encryption Password: Starting "ADMIN"."SYS_EXPORT_TABLE_01": admin/********@glebatp02_tp tables=TITLE_EPISODE dumpfile=title_episode.dmp encryption_pwd_prompt=yes logfile=export.log directory=data_pump_dir EXCLUDE=statistics,index Processing object type TABLE_EXPORT/TABLE/TABLE_DATA Processing object type TABLE_EXPORT/TABLE/TABLE Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT . . exported "ADMIN"."TITLE_EPISODE" 1.395 GB 6635610 rows Master table "ADMIN"."SYS_EXPORT_TABLE_01" successfully loaded/unloaded ****************************************************************************** Dump file set for ADMIN.SYS_EXPORT_TABLE_01 is: /u03/dbfs/DC7C912B04CD03F0E0533D11000AD61D/data/dpdump/title_episode.dmp Job "ADMIN"."SYS_EXPORT_TABLE_01" successfully completed at Wed Apr 13 15:02:40 2022 elapsed 0 00:14:45 [opc@gleb-bastion-us ~]$

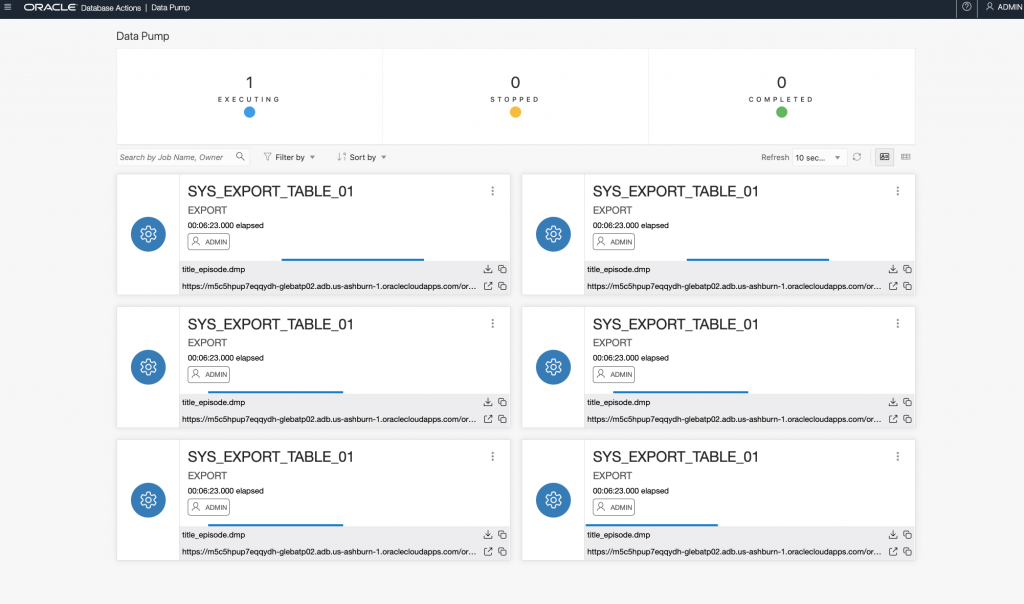

While you are executing you can see the export executing on the “Database Actions”->”Data Pump” page. And funny enough you can see 16 or 18 executions even if you run one non-parallel export.

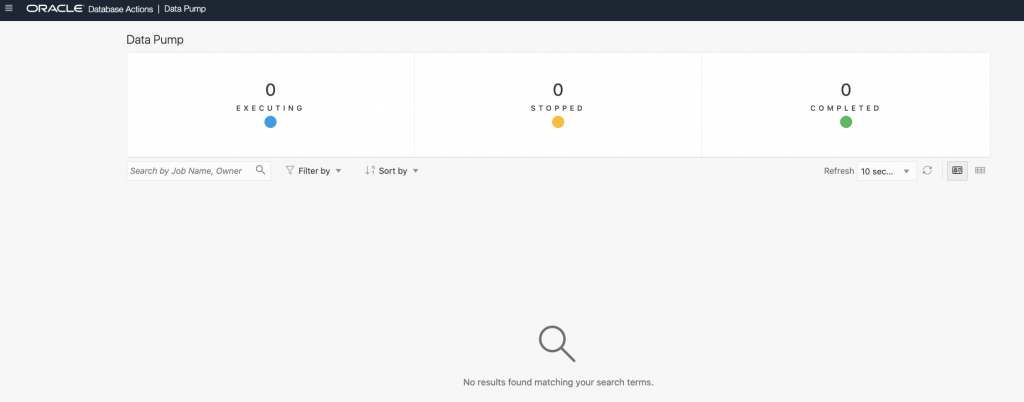

The page doesn’t allow you to start or stop or configure a new export but it gives you the ability to see the log or download dump file. Or rather suppose to give because as soon as your export is completed you cannot do that, the tab doesn’t show any exports anymore.

From the Oracle directory you can copy the data to a storage bucket of your choice. We can use the DBMS_CLOUD.PUT_OBJECT procedure. To do so we need to create credentials to access the storage. Here is the trick. The Autonomous on the shared and dedicated exadata have different documentation pages and while you can use DBMS_CREDENTIAL.CREATE_CREDENTIAL on the dedicated exadata according to the docs you have to use DBMS_CLOUD.CREATE_CREDENTIAL on the shared exadata. If you try to use DBMS_CREDENTIAL on the shared exadata you are getting the error:

BEGIN DBMS_CREDENTIAL.CREATE_CREDENTIAL( credential_name => 'DEF_CRED_NAME', username => 'myuser', password => 'authstring ...' ); END; Error report - ORA-27486: insufficient privileges ORA-06512: at "SYS.DBMS_ISCHED", line 2459 ORA-06512: at "SYS.DBMS_CREDENTIAL", line 30 ORA-06512: at line 2 27486. 00000 - "insufficient privileges" *Cause: An attempt was made TO perform a scheduler operation WITHOUT the required privileges. *Action: Ask a sufficiently privileged USER TO perform the requested operation, OR GRANT the required privileges TO the proper USER(s).

The second thing you have to keep in mind is the type of the credentials. With the DBMS_CLOUD you can create either OCI native credentials based on API keys or AWS ARN compatible type based on user name and the token.

SQL> SELECT * FROM user_credentials; CREDENTIAL_NAME USERNAME WINDOWS_DOMAIN COMMENTS ENABLED _____________________ _______________________________________________________________________________ _________________ ____________________________________________________________________________ __________ OBJECT_STORE_CRED1 ocid1.user.oc1..************************************************************ {"comments":"Created native credential via DBMS_CLOUD.create_credential"} TRUE OBJECT_STORE_CRED2 myuser {"comments":"Created via DBMS_CLOUD.create_credential"} TRUE SQL>

Both credentials types are valid for for the DBMS_CLOUD.PUT_OBJECT procedure.

SQL> BEGIN 2 DBMS_CLOUD.PUT_OBJECT(credential_name => 'OBJECT_STORE_CRED1', 3 object_uri => 'https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp', 4 directory_name => 'DATA_PUMP_DIR', 5 file_name => 'title_episode.dmp'); 6 END; 7* / PL/SQL PROCEDURE successfully completed. SQL> SQL> BEGIN 2 DBMS_CLOUD.PUT_OBJECT(credential_name => 'OBJECT_STORE_CRED2', 3 object_uri => 'https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode2.dmp', 4 directory_name => 'DATA_PUMP_DIR', 5 file_name => 'title_episode.dmp'); 6 END; 7* / PL/SQL PROCEDURE successfully completed. SQL>

That is great but what if we don’t have space on DBFS or want to export directly to an OCI storage bucket? And indeed we can do that by skipping the step and uploading the dump file directly to the storage bucket. What we need is the expdp utility. If the utility’s version is earlier than 19.9 it doesn’t understand the credential parameter and you have to set up default credentials for your database to access the storage bucket. If you look into the documentation you might try to use the command as it is there and get the error.

SQL> ALTER DATABASE PROPERTY SET DEFAULT_CREDENTIAL = 'OBJECT_STORE_CRED1'; Error starting at line : 1 IN command - ALTER DATABASE PROPERTY SET DEFAULT_CREDENTIAL = 'OBJECT_STORE_CRED1' Error report - ORA-44004: invalid qualified SQL name 44004. 0000 - "invalid qualified SQL name" *Document: Yes *Cause: The INPUT parameter string was NOT a valid qualified SQL name. *Action: CHECK WITH the DBMS_ASSERT spec TO verify that the parameter string IS a valid qualified SQL name. SQL>

The reason is that you have to specify the value as ‘SCHEMA_NAME.CREDENTIAL_NAME’ instead of just ‘CREDENTIAL_NAME’. Here is how it works.

SQL> ALTER DATABASE PROPERTY SET DEFAULT_CREDENTIAL = 'ADMIN.OBJECT_STORE_CRED1'; DATABASE altered. SQL>

Now we can try and export the table directly to the bucket using our credentials. I haven’t tested it with the expdp earlier than 19.9 but rather used the latest version. The destination can be either in OCI native format or in swift format. Both notations work and it will transform the path to the swift format behind the scenes. But if we are using the OCI native credentials type with API keys we are getting errors.

[opc@gleb-bastion-us ~]$ expdp admin/**********@glebatp02_tp tables=TITLE_EPISODE credential=OBJECT_STORE_CRED1 dumpfile=https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp filesize=5GB encryption_pwd_prompt=yes logfile=export.log directory=data_pump_dir EXCLUDE=statistics,index Export: Release 21.0.0.0.0 - Production on Wed Apr 13 17:49:07 2022 Version 21.3.0.0.0 Copyright (c) 1982, 2021, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production ORA-39001: invalid argument value ORA-39000: bad dump file specification ORA-31641: unable to create dump file "https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp" ORA-17502: ksfdcre:4 Failed to create file https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp ORA-17500: ODM err:ODM HTTP Not Found [opc@gleb-bastion-us ~]$

The errors complain about the path but in reality the main problem is the credentials type. It cannot work with the OCI native credentials and has to use the token based credentials. If we change the credentials to the second type it works without any problems.

[opc@gleb-bastion-us ~]$ expdp admin/********@glebatp02_tp tables=TITLE_EPISODE credential=OBJECT_STORE_CRED2 dumpfile=https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp filesize=5GB encryption_pwd_prompt=yes logfile=export.log directory=data_pump_dir EXCLUDE=statistics,index Export: Release 21.0.0.0.0 - Production on Wed Apr 13 18:05:22 2022 Version 21.3.0.0.0 Copyright (c) 1982, 2021, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 21c Enterprise Edition Release 21.0.0.0.0 - Production Encryption Password: Starting "ADMIN"."SYS_EXPORT_TABLE_01": admin/********@glebatp02_tp tables=TITLE_EPISODE credential=OBJECT_STORE_CRED2 dumpfile=https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp filesize=5GB encryption_pwd_prompt=yes logfile=export.log directory=data_pump_dir EXCLUDE=statistics,index Processing object type TABLE_EXPORT/TABLE/TABLE_DATA Processing object type TABLE_EXPORT/TABLE/TABLE Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT . . exported "ADMIN"."TITLE_EPISODE" 1.395 GB 6635610 rows Master table "ADMIN"."SYS_EXPORT_TABLE_01" successfully loaded/unloaded ****************************************************************************** Dump file set for ADMIN.SYS_EXPORT_TABLE_01 is: https://swiftobjectstorage.us-ashburn-1.oraclecloud.com/v1/mytenancy/transfer/title_episode.dmp Job "ADMIN"."SYS_EXPORT_TABLE_01" successfully completed at Wed Apr 13 18:09:31 2022 elapsed 0 00:03:40 [opc@gleb-bastion-us ~]$

One more thing I have to mention. The export automatically split the dump to 10MiB chunks for faster upload. So, you are getting the original dump file name with size zero and multiple 10MIB chunks.

I think here I would stop and provide some summary. I think the process should be more user friendly and easier if we target Oracle Autonomous as a developer friendly environment. As for now it has too many steps and the web interface for the data pump is not useful in any way. Here is the short list of what one needs to keep in mind working with Data Pump exports on Oracle Autonomous.

- Oracle Directory uses DBFS in the users default tablespace DATA and we need to keep it in mind.

- GUI Web “Database Actions”->”Data Pump” page doesn’t provide any value.

- You can use DBMS_CLOUD.PUT_FILE to copy from the Oracle directory to your desired location.

- Use DBMS_CREDENTIALS on the dedicated exadata and DBMS_CLOUD on the shared exadata to create credentials.

- Only token based credentials work with Oracle DataPump utility.

- The file will be split to 10MiB chunks in the OCI bucket when you export directly to the bucket.