This post is about backup management for AlloyDB. It is useful at the time of writing but will probably become obsolete soon, once the tools and API for the service mature.

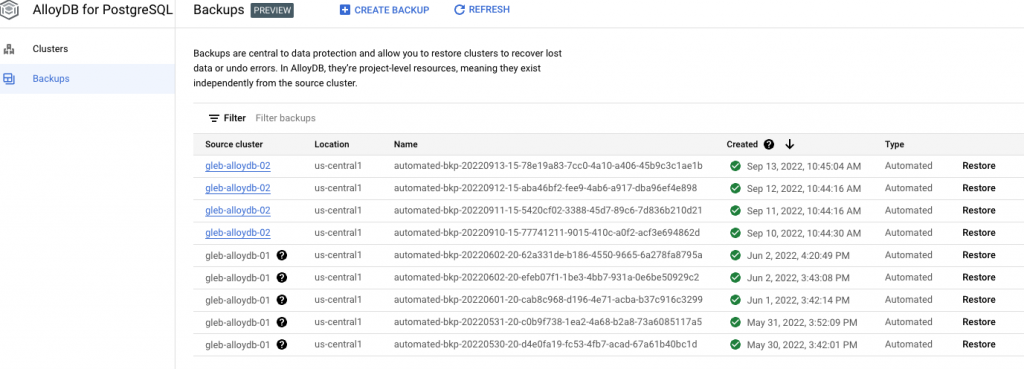

A few words about AlloyDB backups and how they are created. They are quite different from, for example, the default backups in Cloud SQL. In Cloud SQL all backups are bound to the instance: when the instance is deleted, all its backups disappear with it. That makes sense if the backups are storage snapshots of the database behind the scenes. In AlloyDB, however, backups are decoupled from the cluster and exist on their own. If you delete a cluster, the backups stay. I think this is a much better approach because it protects against mistakes such as deleting a cluster before making a clone or exporting the data. For now you can see all the backups for existing and deleted clusters on the “Backups” tab in the console, with the gcloud utility, or by listing them through the GCP REST API.
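For the REST route, here is a minimal Go sketch of what listing looks like. It only builds the request and parses a response body, so nothing is sent. The v1 API version, the helper names newListRequest and parseList, and the sample backup name b1 are my assumptions for illustration; depending on when you read this you may need a different API version.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/url"
)

// apiBase assumes the v1 version of the AlloyDB REST API.
const apiBase = "https://alloydb.googleapis.com/v1"

// Backup holds only the few fields of the backup resource used here.
type Backup struct {
	Name        string `json:"name"`
	ClusterName string `json:"clusterName"`
	State       string `json:"state"`
	CreateTime  string `json:"createTime"`
}

// listResponse mirrors the part of the list response body we care about.
type listResponse struct {
	Backups       []Backup `json:"backups"`
	NextPageToken string   `json:"nextPageToken"`
}

// newListRequest builds (but does not send) a GET request that lists
// the backups of one project and location.
func newListRequest(project, location, token string) (*http.Request, error) {
	u := fmt.Sprintf("%s/projects/%s/locations/%s/backups",
		apiBase, url.PathEscape(project), url.PathEscape(location))
	req, err := http.NewRequest(http.MethodGet, u, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set("Authorization", "Bearer "+token)
	return req, nil
}

// parseList decodes a list response body into a slice of backups.
func parseList(body []byte) ([]Backup, error) {
	var r listResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, err
	}
	return r.Backups, nil
}

func main() {
	req, err := newListRequest("sandbox", "us-central1", "ACCESS_TOKEN")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())

	// A hand-written sample body, not a real API response.
	sample := []byte(`{"backups":[{"name":"projects/sandbox/locations/us-central1/backups/b1","state":"READY","createTime":"2022-09-14T14:44:24.087185163Z"}]}`)
	backups, err := parseList(sample)
	if err != nil {
		panic(err)
	}
	for _, b := range backups {
		fmt.Println(b.Name, b.State, b.CreateTime)
	}
}
```

To actually send the request, pass it to http.DefaultClient.Do with a valid access token, for example one printed by gcloud auth print-access-token.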

But how can we manage the backups? What do we do if we need to implement our own retention policy or simply delete some backups? Here are a couple of ways to do that.

The first way is the gcloud utility, which provides commands to list, create or delete backups for an AlloyDB cluster. For example, we can list the backups using the command:

    bastion$ gcloud beta alloydb backups list
    NAME  STATUS  CLUSTER_NAME  CREATE_TIME  ENCRYPTION_TYPE
    projects/sandbox/locations/us-central1/backups/automated-bkp-20220914-15-0b07c719-7397-408d-b4e9-779dbdb4c8cb  READY  projects/475708065648/locations/us-central1/clusters/gleb-alloydb-02  2022-09-14T14:44:24.087185163Z  GOOGLE_DEFAULT_ENCRYPTION
    projects/sandbox/locations/us-central1/backups/automated-bkp-20220911-15-5420cf02-3588-45d7-89c6-7d836b210d21  READY  projects/475708065648/locations/us-central1/clusters/gleb-alloydb-02  2022-09-11T14:44:16.261711844Z  GOOGLE_DEFAULT_ENCRYPTION

Or we can delete a particular backup using the gcloud beta alloydb backups delete command.

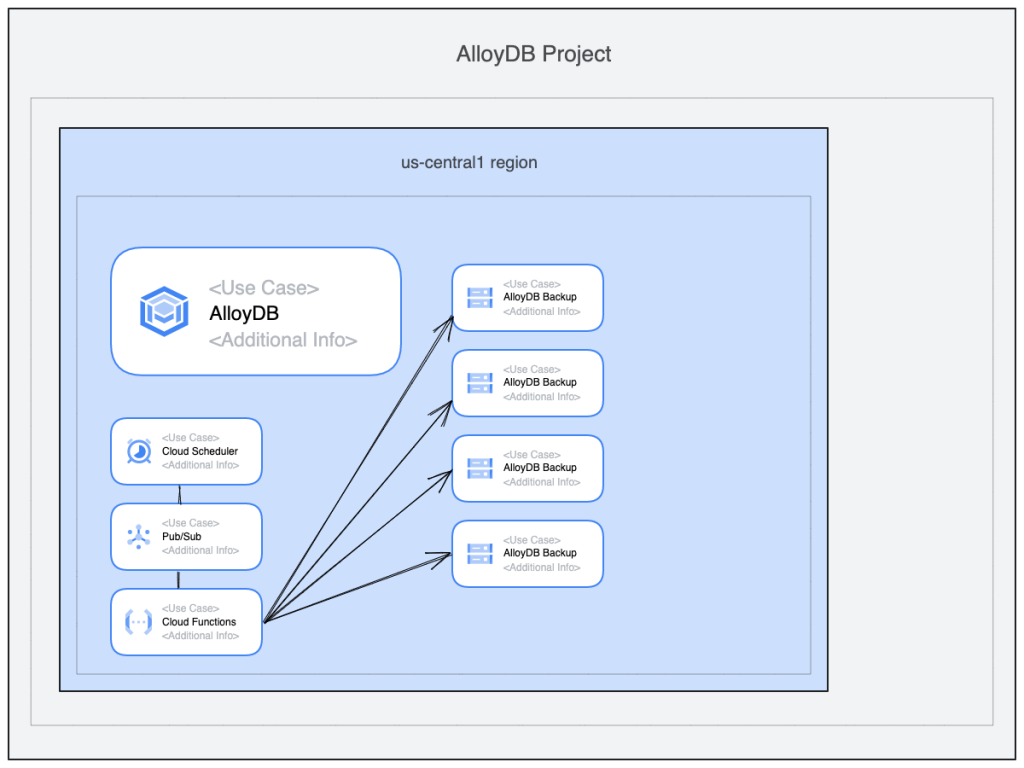

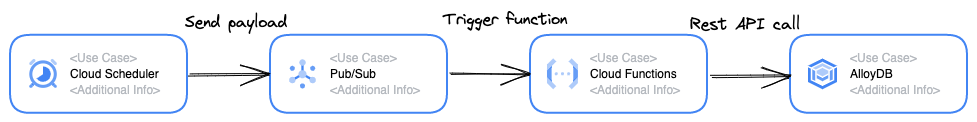

That’s great, but I am lazy and prefer an automated approach. Of course, you could write a bash script that uses gcloud to filter and manage the backups. It might work, but it is not a very scalable or reliable approach. Usually I create a function for a service using the service’s client API and trigger it with a Pub/Sub message carrying the call parameters. Cloud Scheduler is responsible for posting the message to the Pub/Sub topic. Here is a basic diagram (made using the GCP diagram tool):

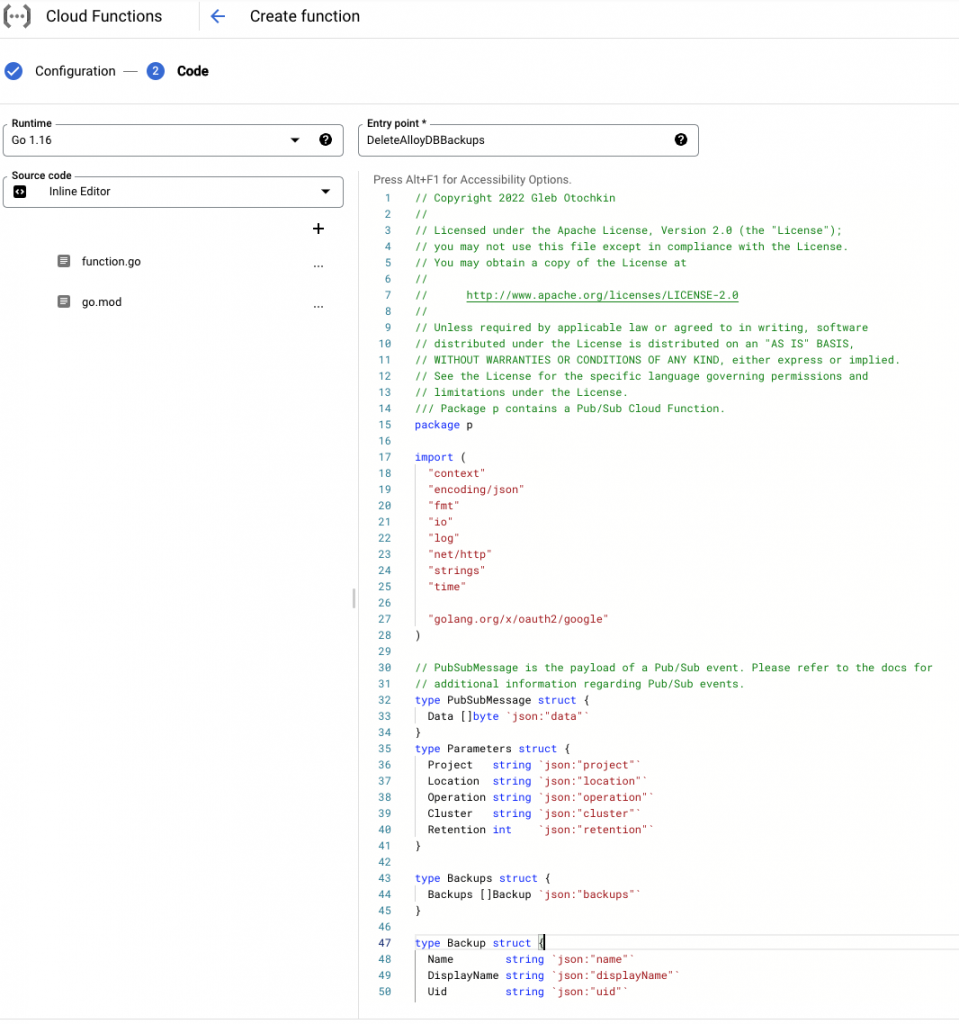

I chose the same workflow for AlloyDB backup management. The only difference was that there was no client API yet, so I had to use the REST API. I prepared a function written in Go that accepts a Pub/Sub message with a JSON payload in which I supply the AlloyDB cluster name, operation type, retention policy and location. Here is an example of the payload.

    { "project":"sandbox", "location":"us-central1", "operation":"DELETE", "cluster":"ALL", "retention":105}

The function can delete backups or create them. You can specify a cluster name or put “ALL”, and it will delete all backups in the project for the given location according to the retention policy, which is expressed in days. You can find the function source code in my repository on GitHub.
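To make the payload handling concrete, here is a small sketch of how such a message could be decoded with only the Go standard library. It is not the code from my repository: the type and function names are hypothetical, the validation rules are assumptions, and the envelope struct covers only the message.data field of the Pub/Sub event body, where the payload arrives base64-encoded.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// pubSubEnvelope models only the part of the Pub/Sub event body used here.
type pubSubEnvelope struct {
	Message struct {
		// encoding/json decodes a base64 string into []byte automatically.
		Data []byte `json:"data"`
	} `json:"message"`
}

// Params mirrors the JSON payload carried in the message.
type Params struct {
	Project   string `json:"project"`
	Location  string `json:"location"`
	Operation string `json:"operation"`
	Cluster   string `json:"cluster"`
	Retention int    `json:"retention"`
}

// decodeParams unwraps the envelope, parses the payload and applies
// a few sanity checks (the checks themselves are assumptions).
func decodeParams(event []byte) (Params, error) {
	var env pubSubEnvelope
	var p Params
	if err := json.Unmarshal(event, &env); err != nil {
		return p, fmt.Errorf("decode envelope: %w", err)
	}
	if err := json.Unmarshal(env.Message.Data, &p); err != nil {
		return p, fmt.Errorf("decode payload: %w", err)
	}
	if p.Project == "" || p.Location == "" || p.Cluster == "" {
		return p, errors.New("project, location and cluster are required")
	}
	switch p.Operation {
	case "CREATE", "DELETE":
		// supported operations
	default:
		return p, fmt.Errorf("unsupported operation %q", p.Operation)
	}
	if p.Operation == "DELETE" && p.Retention < 0 {
		return p, errors.New("retention must not be negative")
	}
	return p, nil
}

// makeEvent wraps a payload in an envelope, which is handy for local tests.
func makeEvent(payload string) ([]byte, error) {
	var env pubSubEnvelope
	env.Message.Data = []byte(payload)
	return json.Marshal(env)
}

func main() {
	event, err := makeEvent(`{"project":"sandbox","location":"us-central1","operation":"DELETE","cluster":"ALL","retention":105}`)
	if err != nil {
		panic(err)
	}
	p, err := decodeParams(event)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", p)
}
```

Rejecting unknown operations up front keeps a malformed scheduler message from silently doing nothing, or worse, from deleting with a default retention of zero.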

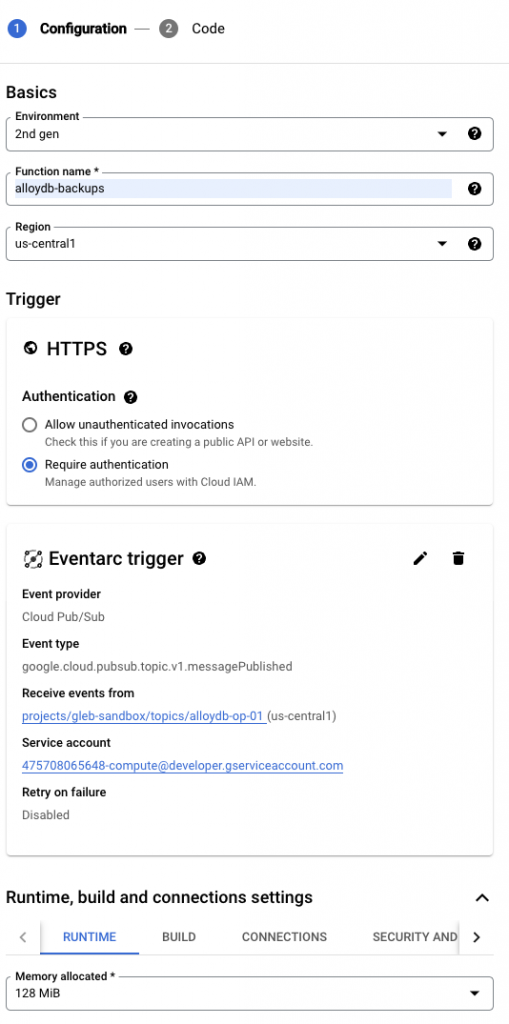

It was my first function using the 2nd generation of GCP Cloud Functions. In reality I probably didn’t need any of the new features provided by the updated engine, but I thought it was time to move on and use the new generation for all new projects.

The process was not too different from creating a 1st gen function, but it asked me to enable some additional APIs.

I already had a Pub/Sub topic created for the AlloyDB operations, and I created the function with an “Eventarc trigger” and the event type “Cloud Pub/Sub”.

You’ve probably also noticed that I modified the runtime settings, reducing the memory to 128 MB.

I set the timeout to 120 seconds just in case and provided the source code.

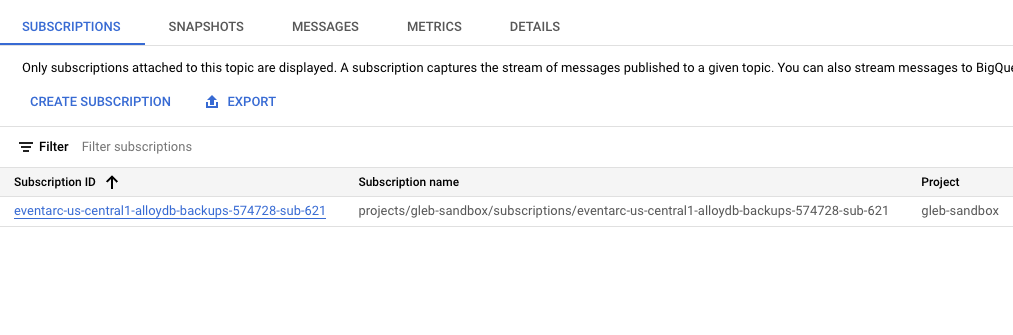

The function was built and published and I could see the new subscriber on my Pub/Sub topic:

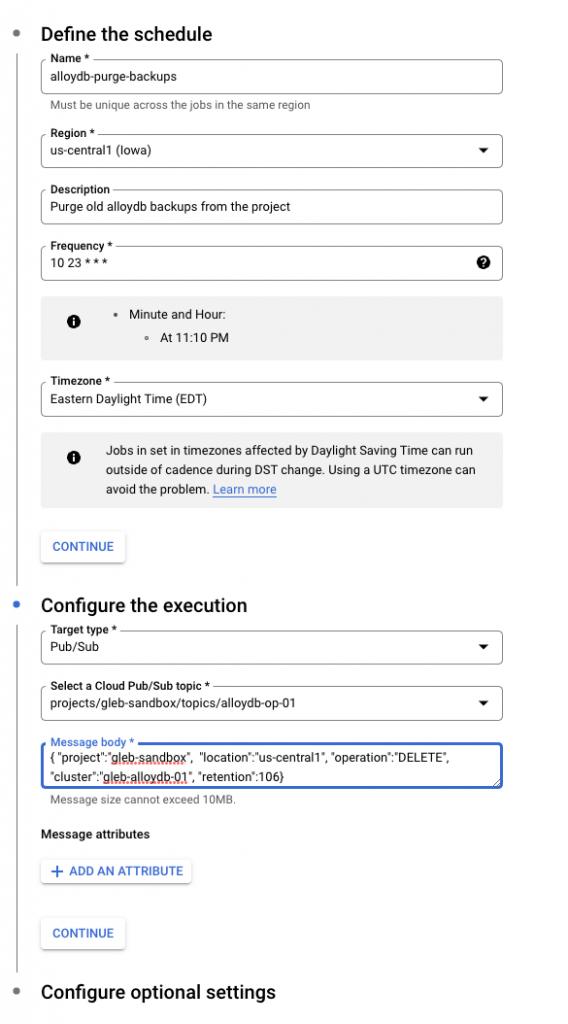

The next step was to schedule a daily run in Cloud Scheduler to send the payload to the Pub/Sub topic. The procedure is simple and takes just a couple of minutes.

As a result, all the backups for gleb-alloydb-01 older than 106 days were scheduled to be deleted automatically from the project. In the same way you can schedule the creation of on-demand backups by changing the operation to “CREATE”.
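The retention check behind a DELETE run can be sketched in a few lines of Go. This is an illustration under my own assumptions rather than the code in the repository: the Backup struct, the expired and mustTime helpers, and the 7-day retention in the example are hypothetical, and the cutoff is simply the current time minus the retention in days.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

// Backup is a trimmed-down view of a backup: its name, the full
// resource path of its cluster, and its creation time.
type Backup struct {
	Name        string
	ClusterName string
	CreateTime  time.Time
}

// expired returns the backups created more than retentionDays before now.
// A cluster value of "ALL" matches every cluster; otherwise the last
// segment of the cluster resource path must equal the given name.
func expired(backups []Backup, cluster string, retentionDays int, now time.Time) []Backup {
	cutoff := now.AddDate(0, 0, -retentionDays)
	var out []Backup
	for _, b := range backups {
		short := b.ClusterName[strings.LastIndex(b.ClusterName, "/")+1:]
		if cluster != "ALL" && short != cluster {
			continue
		}
		if b.CreateTime.Before(cutoff) {
			out = append(out, b)
		}
	}
	return out
}

// mustTime parses an RFC 3339 timestamp and panics on malformed input.
func mustTime(s string) time.Time {
	t, err := time.Parse(time.RFC3339, s)
	if err != nil {
		panic(err)
	}
	return t
}

func main() {
	cluster := "projects/475708065648/locations/us-central1/clusters/gleb-alloydb-02"
	backups := []Backup{
		{Name: "automated-bkp-20220914", ClusterName: cluster, CreateTime: mustTime("2022-09-14T14:44:24.087185163Z")},
		{Name: "automated-bkp-20220911", ClusterName: cluster, CreateTime: mustTime("2022-09-11T14:44:16.261711844Z")},
	}
	now := mustTime("2022-09-20T00:00:00Z")
	for _, b := range expired(backups, "gleb-alloydb-02", 7, now) {
		fmt.Println("would delete:", b.Name)
	}
}
```

Passing the current time in as a parameter, instead of calling time.Now inside the function, makes the selection easy to test before pointing it at real backups.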

I hope this post helps with some basic management automation for the new AlloyDB service. Please let me know if you would like to know more about AlloyDB or have any ideas for automation.